Many of us now use AI tools like ChatGPT on the job.

However, a recent update, GPT’s Controversial Searchability, sparked global concern after it allowed some shared GPT chats to appear in search engine results, raising red flags about privacy, data security, and client confidentiality.

While the issue affects users around the world, it carries extra risks for Filipino remote workers who often handle sensitive client data.

Let’s dive in.

GPT’s Controversial Searchability Feature Explained

The searchability feature allowed GPT chats shared via public links to appear in search engine results such as Google.

While it aimed to make helpful content easier to find, many users didn’t realize that sharing a GPT chat link could make sensitive conversations publicly searchable.

What the Feature Was Designed to Do

The feature was designed to make helpful GPT chats easier to discover online.

For example, if someone shared a link to a GPT bot or conversation, that link could show up in search results.

This was meant to benefit creators and educators who wanted to share AI tools or prompt examples.

However, the update unintentionally fueled the GPT search controversy, because users weren’t fully aware that shared links could be indexed and seen by anyone.

The Unintended Consequences for Users and Businesses

Problems arose when users didn’t fully understand how the feature worked or how far their shared links could travel.

Thus, some unknowingly shared chats that contained:

- Personal information

- Client-related details

- Sensitive work content

This sparked AI search privacy concerns among individuals and companies alike, especially in industries where confidentiality is non-negotiable.

For remote workers handling reports, financials, or customer data, these leaks were more than tech slip-ups; they could amount to breaches of contract.

Why It Sparked Widespread Privacy Concerns

The main issue was a lack of awareness. Many users assumed a shared chat link would be seen only by the people they sent it to.

However, once a shared link was indexed, it could show up in Google or other search engines for anyone to find.

This fueled concerns about GPT data scraping, AI search engine ethics, and how much control users really had over what they shared.

In response to the backlash, OpenAI withdrew the feature and introduced measures to keep shared conversations from being indexed again.

Public Backlash and Global Industry Response

When people found out that their GPT chats could appear in Google search results, it caused a global stir.

The discovery sparked the GPT web indexing debate and a wave of concern over AI privacy and transparency.

Impact on AI Policy and User Trust

This issue added pressure on lawmakers to create or update rules around AI, especially as concerns grew about data exposure and the gaps in how AI search is regulated.

In many countries, conversations around digital privacy and AI use have become more serious.

New policy discussions focused on:

- How long AI tools store data

- Whether users know how their data is used

- Making sure AI-generated work is labeled clearly

As a result, many users became more careful. Remote workers, in particular, began keeping personal or client-related details out of their AI prompts or switched to safer, offline tools.

What It Means for Future AI Development

This controversy may change how AI tools are built. It may push companies to focus on privacy and control, not just convenience.

In response to ongoing concerns about GPT search transparency and ChatGPT search limitations, we’ll likely see:

- More built-in privacy settings by default

- Clear options to keep chats private or temporary

- AI tools with “work-safe” modes that avoid saving data

- Better ways to review and control AI activity logs

For remote professionals, this means greater control. It also brings more responsibility to protect sensitive data and stay informed about the tools you use.

How GPT Searchability Affects Remote Workers and Freelancers

For Filipino freelancers and remote workers, GPT tools help with daily tasks like writing, summarizing, or translating.

However, the searchability issue raised an important question: Are we entrusting AI with too much?

This issue isn’t just about data leaks.

It’s about how GPT affects your workflow, client trust, and long-term professional reputation, in a world where AI tools grow more intrusive, including in search and SEO, as they grow more useful.

Risks to Client and Company Confidential Information

Many online workers use ChatGPT to draft or polish documents for clients.

While this saves time, it also raises a critical question: when does AI-assisted productivity start putting sensitive data at risk?

Here are some sample scenarios:

Note: Real names have been withheld upon request.

Case 1: The Client’s Name That Shouldn’t Have Been Made Public

Carlo, a part-time content writer from Manila, used GPT to brainstorm blog headlines for a new campaign.

He included the client’s brand name, launch date, and internal theme instructions in the prompt.

A week later, his client Googled their brand name—and found Carlo’s GPT prompt indexed in the results.

They were furious that confidential marketing plans had been exposed before launch, and Carlo was told he would need legal clearance for any future AI use.

Case 2: The NDA Violation via Innocent Copy-Paste

Jessa, a remote admin assistant, signed an NDA with a U.S.-based company. She used GPT to improve the clarity of internal training materials and shared the final doc with her manager.

However, she had copied chunks of the original doc (including real employee names) into GPT.

A cybersecurity audit later revealed that the prompt had been indexed. Her client considered this a breach of contract, and she faced legal consequences despite having no malicious intent.

Case 3: The Virtual Assistant and the Exposed Proposal

Anna, a freelance VA from Davao, used GPT to rewrite a detailed business proposal sent by a client.

She shared the final output with the client, but unknowingly kept the original prompt (which included sensitive pricing tiers and internal strategy) in her GPT history.

A few weeks later, she noticed that the prompt was searchable on Google due to a shared link.

Her client wasn’t pleased to learn their confidential pitch could be found online, and Anna lost the contract.

These aren’t just technical risks; they’re professional risks.

If a client’s info is compromised, they may question your ability to work independently or securely—even if the leak isn’t technically your fault.

How AI Prompts Could Accidentally Leak Proprietary Data

Another hazard many people overlook is that the prompt itself can contain sensitive content.

Even a few keywords can give away:

- Business strategies (“Write a pitch for our new fitness app launching next month”)

- Project scope (“Summarize our SEO plan for Q4 including link-building and Google Ads”)

- Events or developments best kept under wraps (“Client was unhappy about the billing error; draft an apology email”)

If you use AI tools as part of your remote work process, these prompts can reveal more than you think.

Moreover, if those prompts are searchable, it’s not just your work that’s exposed; it could be your client’s operations.

The Career Risks of a Compromised GPT Chat History

As a freelancer or remote worker, your reputation is your brand.

Unlike traditional office-based employees, you don’t have a legal team or IT department backing you up. One leaked chat, even by accident, can lead to:

- Terminated contracts

- Negative reviews or feedback on freelancer platforms

- Lost referrals or damaged trust in client circles

If you use GPT at work, you may also need to explain how you manage privacy risks like this one when pitching to prospective clients, especially now that GPT search engine indexing issues have made that conversation more urgent.

Can Your GPT History Be Used as Court Evidence?

Another emerging concern goes beyond privacy: legal exposure and its consequences.

Many thought AI chats were private, but the recent searchability scandal proved otherwise.

In some cases, your GPT chats can even be used as official evidence in court.

Legal Precedents for Digital Data in the Philippines

In the Philippines, digital data like emails, chat logs, and AI-generated content can be used as court evidence under the Rules on Electronic Evidence (A.M. No. 01-7-01-SC) and the Electronic Commerce Act (R.A. 8792).

This means your GPT chats could be admitted as evidence in court if they are relevant, properly authenticated, and lawfully obtained.

Courts, including the Supreme Court, have ruled that screenshots and chat logs, even from personal accounts, can help establish proof in both civil and criminal cases.

For example, in People v. Rodriguez (G.R. No. 263603), the Supreme Court accepted chat logs as valid evidence to support a criminal charge.

This adds another layer of caution for remote workers, especially in light of the ChatGPT web crawler debate, where unintended public sharing of AI chats raised concerns about privacy, ownership, and legal exposure.

It also deepens the AI-generated content controversy, as the line between personal and professional use becomes blurrier.

So if a GPT prompt or response played a role in a work-related problem, it might be used in court—just like any written document.

How AI Chat Logs Could Be Subpoenaed

Your GPT history could be requested in court, for example through a subpoena, if:

- It includes client instructions linked to a dispute.

- It contains evidence of possible NDA violations or mishandling of private data.

- There’s an ongoing investigation into copyright, plagiarism, or cybercrime.

- A client or employer needs proof of AI use or copied work.

Even if you delete your chats, older versions or shared links might still be recovered by legal or tech experts.

Implications for Freelancers and Contractual Workers

For Filipino remote workers, especially freelancers and contractors, this brings up three key concerns:

Accountability

If you don’t disclose GPT use, you could be accused of misrepresentation or breaking your contract, especially if the work was supposed to be original.

It’s best to be upfront about GPT use from the beginning.

Data Handling Risks

Typing sensitive details like passwords, client emails, or project info into GPT might be construed as a breach of client confidentiality.

Legal Vulnerability

Freelancers don’t always have legal help or HR support like full-time employees. If a client files a complaint tied to AI misuse, it can be harder to defend yourself.

What to Do if Your GPT History at Work Got Compromised

If a GPT conversation you shared (intentionally or accidentally) contains sensitive data, act fast to regain control and limit exposure.

Immediate Steps (Changing Credentials, Informing Employer/Client)

- Update passwords or credentials mentioned in the compromised prompt or response—assume they’re no longer safe.

- Notify the affected client or employer immediately. Transparency and prompt action can preserve trust.

- Delete or disable the shared GPT link right away. Even if it was already indexed, disabling the link prevents it from being accessed further.

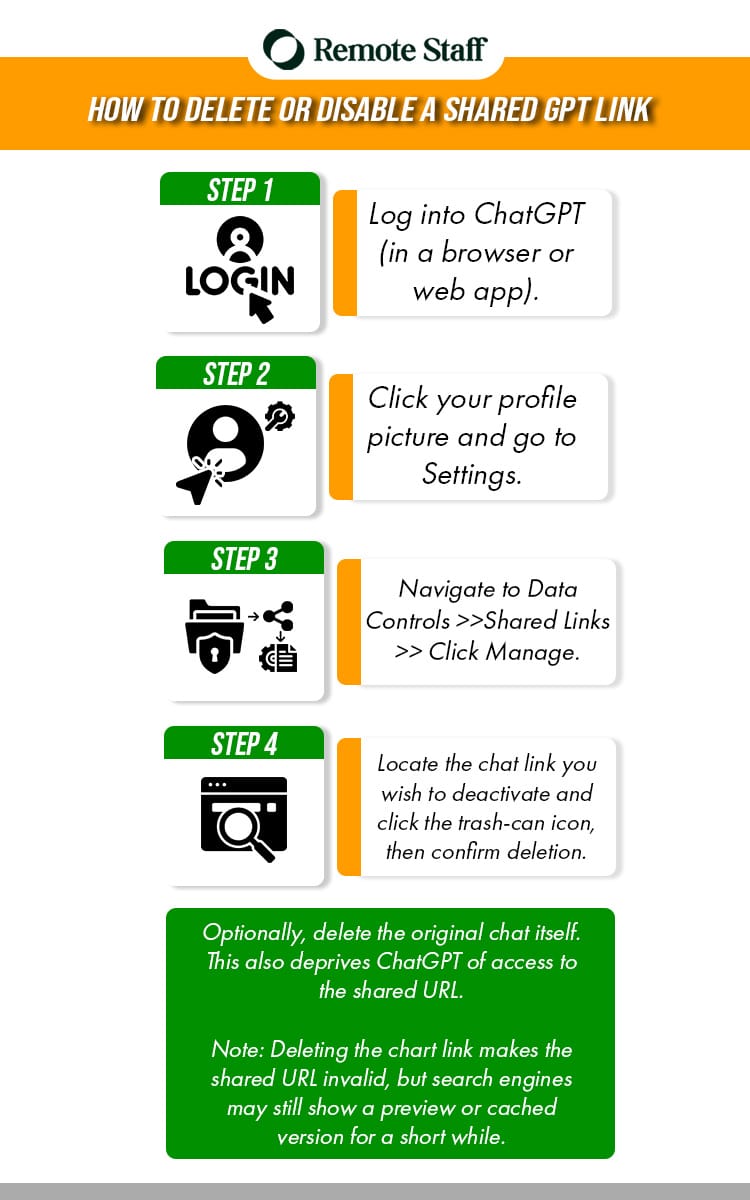

How to Delete or Disable a Shared GPT Link

To remove access or prevent others from viewing a shared GPT conversation:

- Log into ChatGPT (in a browser or web app).

- Click your profile picture and go to Settings.

- Navigate to Data Controls → Shared Links → Click Manage.

- Locate the chat link you wish to deactivate and click the trash-can icon, then confirm deletion.

Optionally, delete the original chat itself. Deleting a conversation also removes its shared link.

Note: Deleting the chat link makes the shared URL invalid, but search engines may still show a preview or cached version for a short while.

Clicking it, however, typically leads to a 404 “page not found.”

Reviewing Saved AI Prompts for Sensitive Data

Go through your ChatGPT history and identify any conversations that mention:

- Names or emails

- Client or company information

- Project details, pricing, or internal strategies

If you find any sensitive content:

- Make a list of what’s been exposed.

- Let your client know exactly what might have been accessed.

- Offer to rework or replace affected deliverables if needed.
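If you have many conversations to review, a short script can flag the obvious items first. The Python sketch below is a minimal example under stated assumptions: it works on chat text you’ve already copied or exported, the function name `find_sensitive` and the sample client terms are hypothetical, and its simple patterns only catch email addresses, Philippine mobile numbers written as 09XXXXXXXXX or +639XXXXXXXXX, and names you list yourself. Treat it as a first pass, not a substitute for reading your chats.

```python
import re

# Simple patterns (assumptions): tune them to the data you actually handle.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Philippine mobile numbers written as 09XXXXXXXXX or +639XXXXXXXXX, with no
# spaces or dashes; the lookarounds stop it matching inside longer digit runs.
PH_MOBILE_RE = re.compile(r"(?<!\d)(?:\+63|0)9\d{9}(?!\d)")


def find_sensitive(text, client_terms):
    """Return (kind, value) pairs for anything in `text` that looks sensitive."""
    hits = [("email", m.group()) for m in EMAIL_RE.finditer(text)]
    hits += [("phone", m.group()) for m in PH_MOBILE_RE.finditer(text)]
    lowered = text.lower()
    for term in client_terms:
        # Case-insensitive substring check for client names, codenames, etc.
        if term.lower() in lowered:
            hits.append(("client term", term))
    return hits


# Hypothetical example chat and client terms.
chat = "Draft a follow-up to maria@acmecorp.com about the Q4 Acme launch. Call 09171234567."
for kind, value in find_sensitive(chat, ["Acme", "Project Falcon"]):
    print(f"{kind}: {value}")
# email: maria@acmecorp.com
# phone: 09171234567
# client term: Acme
```

Anything it flags goes on your list of exposed information; anything it misses, such as pricing or strategy written in plain words, still needs a manual check.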

Best Practices for Future Use (Separate Accounts, Prompt Hygiene)

To avoid future exposure:

- Use separate ChatGPT accounts: one for personal use and another for work tasks.

- Avoid including real client data in prompts. Use placeholders like Client A or Project X instead.

- Clear chat history regularly after completing client-related work.

- Review a chat carefully before creating or sharing a link to it, and don’t share it unless you’re sure it contains no sensitive information.

- Consider using Temporary Chat, which doesn’t save conversations to your history, for highly confidential or sensitive work.

- Follow company-approved AI guidelines and any official rules or client instructions on GPT use. When in doubt, ask first, and don’t proceed without clear instructions.

- Secure your accounts. Log in only over trusted networks, make sure the connection uses HTTPS, enable two-factor authentication, and keep your login credentials private.
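To make the placeholder habit easier, you could swap real names out before pasting a prompt and swap them back into the AI’s output afterward, on your own computer. Here’s a minimal Python sketch, assuming you keep a small mapping of real names to placeholders locally; `redact`, `restore`, and the example names are hypothetical, and exact-text matching won’t catch misspellings, nicknames, or details like prices.

```python
import re

# Simple email pattern (an assumption; it won't match every valid address).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact(prompt, names):
    """Mask emails and replace real names with placeholders before prompting.

    `names` maps real names to placeholders, e.g. {"Acme Corp": "Client A"}.
    """
    cleaned = EMAIL_RE.sub("[EMAIL]", prompt)
    # Longest names first, so "Acme Corp" is replaced before a shorter "Acme".
    for real in sorted(names, key=len, reverse=True):
        placeholder = names[real]
        pattern = re.compile(re.escape(real), re.IGNORECASE)
        # A function replacement keeps backslashes in placeholders literal.
        cleaned = pattern.sub(lambda _m, p=placeholder: p, cleaned)
    return cleaned


def restore(text, names):
    """Put the real names back into the AI's output, offline."""
    # Longest placeholders first, so "Client AB" isn't half-replaced by "Client A".
    for real, placeholder in sorted(names.items(), key=lambda kv: len(kv[1]), reverse=True):
        text = text.replace(placeholder, real)
    return text


names = {"Acme Corp": "Client A", "Project Falcon": "Project X"}
safe = redact("Write a launch email from Acme Corp to jun@acmecorp.com about Project Falcon.", names)
print(safe)  # Write a launch email from Client A to [EMAIL] about Project X.
print(restore("Client A is excited to announce Project X.", names))
# Acme Corp is excited to announce Project Falcon.
```

Only the redacted text ever goes into GPT; the mapping and the restored draft stay on your machine.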

Frequently Asked Questions (FAQs)

Got concerns about using GPT for work? You’re not alone, especially with rising issues around GPT’s Controversial Searchability.

Here are quick answers to some of the most common questions from Filipino freelancers and remote workers:

Does GPT permanently store my data?

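Not always, but it can.

ChatGPT keeps your conversations in your history until you delete them, and depending on your settings, they may be used to improve OpenAI’s models. Temporary Chats don’t appear in your history, although OpenAI may still keep a copy for a limited time.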

Publicly shared links, however, remain accessible unless manually deleted.

Why are people suddenly concerned about AI privacy?

The recent GPT content discovery issues highlighted how AI chats might not be as private as we thought.

These concerns triggered debates about how searchable and permanent AI prompts actually are.

How can I delete my GPT conversation history?

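To delete a single conversation, open the menu next to it in the sidebar and choose Delete. To clear everything at once, use the Delete all chats option in Settings (its exact location varies by app version).

To keep future conversations out of your history, use Temporary Chat. You can also turn off Improve the model for everyone under Data Controls if you don’t want your chats used for training.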

Can I still use GPT safely for client work?

Yes, but with care. Stick to company-approved guidelines, avoid inputting sensitive or personal data, and never assume chats are completely private.

Treat every prompt as if it could become public later.

What should I do if a client is concerned about GPT privacy?

Be transparent about the extent of your AI use.

Brief your client on how you use GPT, what safety steps you take, and reassure them that no private data is entered into the tool.

Offer to use Temporary Chat or avoid GPT altogether if they request it.

Conclusion: Empowering Remote Workers to Use GPT Responsibly

AI tools like GPT can make remote work faster and easier, but they also raise serious risks around privacy, security, and legality.

For Filipino freelancers and remote professionals, it’s important to treat AI conversations like official work documents.

Understand what you’re typing, where it’s stored, and how it might be used, especially in light of GPT’s controversial searchability incident.

Simple actions like avoiding sensitive data, following client policies, and using secure accounts can go a long way.

At Remote Staff, we support safe and ethical AI use. We work with clients who value data privacy and provide remote professionals with clear tasks, secure tools, and consistent communication.

This helps minimize legal or data-related risks and empowers responsible and productive use for both parties involved.

Looking for stable online jobs with supportive clients?

Sign up with Remote Staff today and access remote jobs that value both innovation and integrity.